November 3, 2023

Our Applications are Moving House

Blog by Will Young, Solution Architect, UnderwriteMe

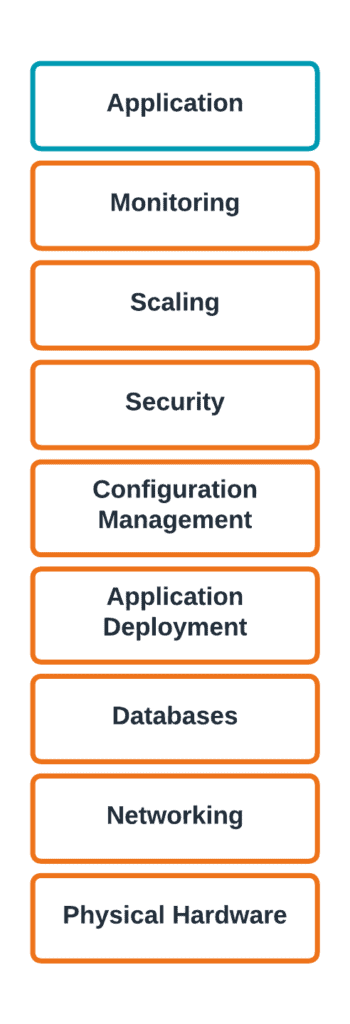

IT infrastructure works best when clients don’t know it’s there. It’s usually invisible to clients unless it breaks, in which case it becomes extremely visible. That’s because it provides all of the services that an application uses. Without infrastructure, an application wouldn’t have a server to run on, a network to connect to, or a place to store any of its data. If the infrastructure goes down, the whole application goes down. So it’s important.

At UnderwriteMe, we’re coming towards the end of a multi-year project to replace our entire infrastructure platform by containerising our applications (see my previous blog post). Almost every team has been involved, with the aim of delivering a faster, more reliable service for our clients. The platform isn’t a single service; it’s shorthand for the set of services, networking, and even physical hardware that our applications run on. These services allow us to do things like deploy a new version of the application, or monitor whether the application is responding quickly to user activity. The application itself sits on top of all of these.

We regularly upgrade clients to newer versions of our applications, but migrating to the new platform is much more complex than a typical upgrade. With a normal upgrade, we leave all of the hardware as-is, and just deploy a new version of the application onto the server. With migrations, we are moving applications to a completely new set of infrastructure which is managed in a new, more modern way (see my previous blog post).

To complete a migration successfully, we need to create a copy of all resources (application, data, configuration, etc.) in the new platform and verify that the copy behaves in exactly the same way as the current application does in the old platform. Once this is done, we “cut over” to the new platform.

To begin this process, we deploy the application into the new platform so it can run in parallel with the current live application. We’ll keep it running like this until after the migration completes. By creating the new environment long before the release, we’re able to confirm that connectivity and other potential issues are all resolved well before we switch over to the new version of the application.

Once the new environment is created and the application deployed, we need to copy the data from the old environment to the new one. We store our data inside a database, which organises data into a set of spreadsheet-like tables. We cannot easily move the database from the old platform to the new platform, so we have to copy the data and then run a comprehensive suite of tests to validate it in the new database. We follow a detailed playbook to make sure that no customer data is accidentally changed or lost in the migration. When we copy the data, we also migrate any other application data, such as editor users and the rules in place, so users won’t see any change from the old environment.
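For the technically curious, here is a minimal sketch of the kind of check that can confirm a copied table matches its original. It is an illustration rather than our actual test suite: the function names (`table_fingerprint`, `find_mismatched_tables`) and the sample tables are made up for this post, and each database is stood in for by a plain dictionary of rows.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, digest) for a table, ignoring row order."""
    digest = hashlib.sha256()
    # Serialise each row with its columns sorted by name, then sort the rows,
    # so that ordering differences between the two databases do not matter.
    for line in sorted(repr(sorted(row.items())) for row in rows):
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return len(rows), digest.hexdigest()


def find_mismatched_tables(old_db, new_db):
    """Compare two {table_name: [row_dict, ...]} snapshots.

    Returns the sorted names of tables that are missing on one side
    or whose contents differ.
    """
    mismatched = []
    for name in sorted(set(old_db) | set(new_db)):
        if name not in old_db or name not in new_db:
            mismatched.append(name)
        elif table_fingerprint(old_db[name]) != table_fingerprint(new_db[name]):
            mismatched.append(name)
    return mismatched


# Hypothetical sample data: "cases" only differs in row order, "rules" has a changed value.
old_platform = {
    "cases": [{"id": 1, "status": "open"}, {"id": 2, "status": "closed"}],
    "rules": [{"id": 10, "name": "bmi"}],
}
new_platform = {
    "cases": [{"id": 2, "status": "closed"}, {"id": 1, "status": "open"}],
    "rules": [{"id": 10, "name": "BMI"}],
}

print(find_mismatched_tables(old_platform, new_platform))  # ['rules']
```

Comparing a row count plus a fingerprint per table keeps the check cheap to repeat, which matters when the same validation has to be run after every copy.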

Once the data is migrated, we run a series of tests to verify that the outputs of the engine are exactly the same in both platforms.
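The idea behind these tests can be sketched in a few lines: send the same inputs to both platforms and report any case where the answers differ. Everything in the example below is hypothetical, including the `compare_engines` helper and the toy age-based decision rule, which simply stands in for a real engine.

```python
def compare_engines(test_cases, old_engine, new_engine):
    """Run every test case through both engines and collect any differences."""
    differences = []
    for case in test_cases:
        old_result = old_engine(case)
        new_result = new_engine(case)
        if old_result != new_result:
            differences.append((case, old_result, new_result))
    return differences


# Toy stand-ins for the engine on each platform (assumptions for illustration only).
def old_platform_engine(case):
    return "refer" if case["age"] > 60 else "accept"


def new_platform_engine(case):
    return "refer" if case["age"] > 60 else "accept"


def misconfigured_engine(case):
    # Deliberately wrong boundary, to show what a failed comparison looks like.
    return "refer" if case["age"] >= 60 else "accept"


cases = [{"age": 30}, {"age": 60}, {"age": 75}]

print(compare_engines(cases, old_platform_engine, new_platform_engine))
# []
print(compare_engines(cases, old_platform_engine, misconfigured_engine))
# [({'age': 60}, 'accept', 'refer')]
```

An empty list means the two platforms agreed on every case. Anything else points directly at the input that needs investigating.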

The data copy is actually done twice. The first copy happens before a client ever sees the new environment: we do it during the day, working from a recent backup. This tells us how long the copy will take and confirms that the new version of our applications can handle the older backup. We then work with clients to make sure that the engine is perfectly integrated with their infrastructure. Finally, we do a second copy of the data, right before go-live. This second copy is usually done overnight, and ensures that all cases created that day will appear in the new engines and editor.

Running the old and new environments side by side and then switching over is a technique known as a blue-green deployment.

Because the server and the way it’s hosted are changing, the new server will have a different URL and certificate. We use certificates along with whitelisting to ensure that only the right people have access to the UE. With the old platform, we had a manual process to generate certificates. This worked, but relied on consistently documenting how each certificate was issued and what it was for. That was error-prone and time-consuming, so in the new platform the certificates are automatically generated and managed. This automatically creates an audit log, so we can see who was given access and when. We’re also changing the naming convention for our URLs, and keeping the pattern consistent across customer installations.

These changes will help us deliver a more performant and reliable service, and help us deliver new features faster. It’s an infrastructure change, so you won’t even notice. And that’s a good thing.